OVERVIEW

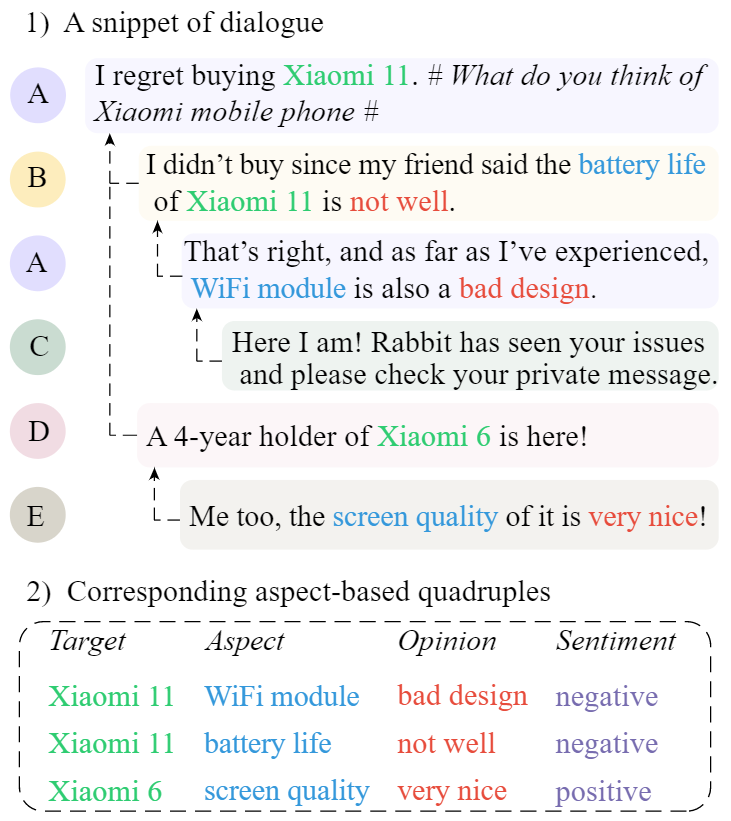

The rapid development of aspect-based sentiment analysis (ABSA) within recent decades shows great potential for real-world society. The current ABSA works, however, are mostly limited to the scenario of a single text piece, leaving the study in dialogue contexts unexplored. We organized the shared task of conversational aspect-based sentiment quadruple analysis, namely ConASQ, aiming to detect the quadruple of {target-aspect-opinion-sentiment} in a dialogue:

Comparing to the standard sentence-level ABSA, ConASQ is more challenging because the sentiment elements in one quadruple may distributed and scattered in different utterances, which thus requires carefully modeling of dialogue discourse features, e.g., speaker role and co-reference. We provide a table-filling baseline method for benchmarking this task. We hope the new benchmark will bridge the gap between fine-grained sentiment analysis and conversational opinion mining, and spur more advancements in the sentiment analysis community. The dataset used for the shared task includes both Chinese and English languages.

INSTRUCTIONS

Data Source

We got the data for our task from posts and replies about electronic products, especially mobile phones, on Sina Weibo. To protect privacy, we remove the user nicknames of each sentence. We organized the posts into conversation trees based on the reply relation. Then we annotated the aspect-based sentiment quadruple in the dialogue. Finally, we now have 1000 dialogues, each containing up to 10 sentences. We also translate the original corpus with Chinse language into English language and project the annotation to obtain a parallel corpus. We random split the dataset into train, valid and test with a ratio of 8:1:1. The overall statistics of our corpus is shown as follows:

| Lang | Set | Dialogue | Utterance | Speaker | Target | Aspect | Opinion | Quadruple |

|---|---|---|---|---|---|---|---|---|

| CH | total | 1,000 | 7,452 | 4,991 | 8,308 | 6,572 | 7,051 | 5,742 |

| train | 800 | 5,947 | 3,986 | 6,652 | 5,220 | 5,622 | 4,607 | |

| valid | 100 | 748 | 502 | 823 | 662 | 724 | 577 | |

| test | 100 | 757 | 503 | 833 | 690 | 705 | 558 | |

| EN | total | 1,000 | 7,452 | 4,991 | 8,264 | 6,434 | 6,933 | 5,514 |

| train | 800 | 5,947 | 3,986 | 6,613 | 5,109 | 5,523 | 4,414 | |

| valid | 100 | 748 | 502 | 822 | 644 | 719 | 555 | |

| test | 100 | 757 | 503 | 829 | 681 | 691 | 545 |

Corpus Sample

- Dialogue: Text content of the conversation.

- Speaker: Speaker of each utterance. The numbers are used internally within the dialogue to differentiate between different speakers.

- Replies: Reply relation in the conversation. The i-th utterance replies to the Ri-th utterance.

Task and Evaluation

Our goal is to extract all quadruples in the dialogue with given replies and speaker information:

Task Formulation:

input : Dialogue, Speaker, Replies

output: Quadruple

To evaluate the performance, we use two metrics: micro-F1 score and iden-F1 score.

The F1 score is computed using precision (P) and recall (R), which are calculated as follows:

P = TP / (TP + FP) R = TP / (TP + FN) F1 = 2 * P * R / (P + R)

where TP, FP, and FN represent specific items that are used to calculate the F1 score In the context of a Confusion_matrix , In particular, when computing the micro-F1 score, TP corresponds to the number of predicted quadruples that match exactly with those in the gold set. On the other hand, for the iden-F1 score, TP counts the number of times the triplets(without considering sentiment polarity) in the prediction match those in the golden set. The FP and FN also vary in the two settings. Overall, we use the average of the two evaluation metrics to measure the performance of the model.

ORGANIZERS

Languag and Cognition Computing Laboratory, Wuhan University

NeXT++ Research Center, National University of Singapore

School of Computing and Information Systems, Singapore Management University